本文记录一下发生在2023年10月份的一次线上问题的排查过程。一开始是收到生产环境网关下大量请求404的告警,但是没过几分钟自己恢复了。因为报404的都是业务真实在用的接口,于是开始排查原因。最后发现是运维同事新增了一个单独看起来挺正常的 ingress 资源配置,却因为没有考虑当前 k8s 集群下 ingress 资源现状,导致 rules 匹配出了意外。希望大家也引以为戒。

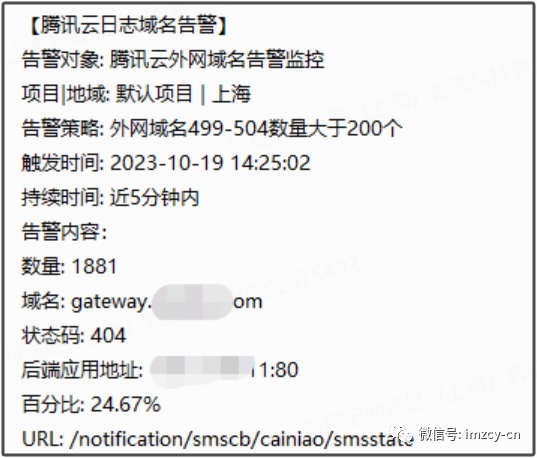

收到告警

企业微信群收到告警,网关服务对应域名请求存在大量 404 。到 Grafana 查看请求状态分析的监控面板,确实这会每分钟2000的404了,并且好像都是通知服务对应的接口。因为都是 404 ,没有 5xx 的状态码,所以一开始想着是不是这个接口已经下掉了?找对应开发确认被告知该接口是当前业务上真实在用的接口。那是谁偷偷发版发出问题了?喊了一嗓子都说没动、没发版。正说着呢,有人说恢复了,去查看确实 404 确实没了,好吧,不用着急了,慢慢查吧。。。

检查发版:查看通知服务对应 pod 的状态,根据 AGE 列的值可以看出都是已经创建了 39 小时的 pod ,确实没发版。RESTARTS 列的值都为 0 ,说明 pod 刚才也没异常重启。

[root@imzcy ~]# kubectl -n prod get pods -o wide |grep "NAME\|notificationproducer"

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-java-notificationproducer-v2-997bfd4dc-5jnr6 1/1 Running 0 39h 192.18.54.57 192.18.52.11 <none> <none>

deployment-java-notificationproducer-v2-997bfd4dc-dvwtk 1/1 Running 0 39h 192.18.54.251 192.18.52.12 <none> <none>

deployment-java-notificationproducer-v2-997bfd4dc-mdxvb 1/1 Running 0 39h 192.18.54.64 192.18.52.11 <none> <none>

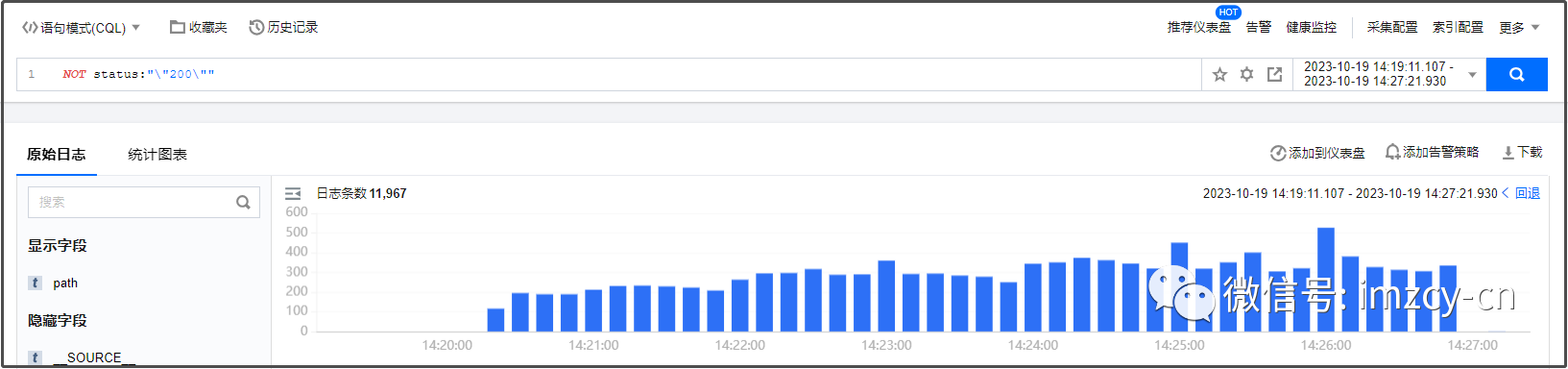

分析日志

检索状态为非 200 的请求

按请求 path 分类发现不止 notification 一个服务,包括 paycenter 服务也有问题

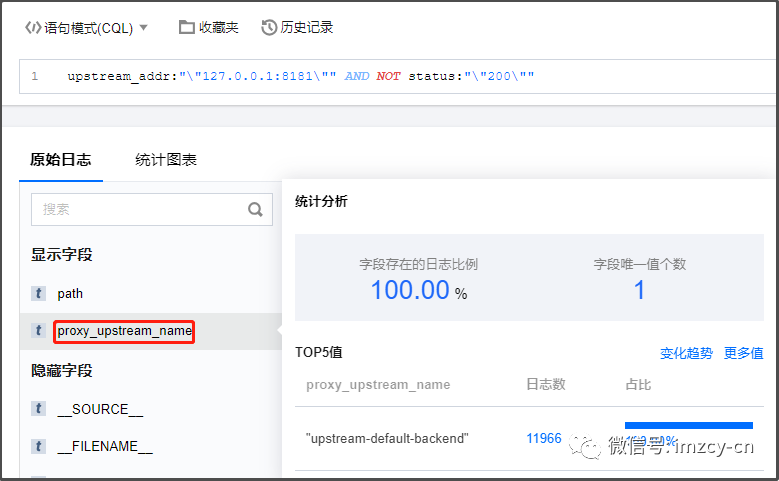

按 upstream_addr 分组,我擦,怎么后端地址都是 127.0.0.1:8181 这个!

查看 upstream 对应的服务名,咋是 upstream-default-backend 这个呀!难道是 ingress-nginx-controller 有啥情况?

根据 ingress_addr 分类发现两个节点都有异常日志

检查 ingress-nginx-controller 状态

确认 controller 对应 pod 状态 - AGE 最少为 2d6h ,说明没有重建。RESTARTS 为0,说明也没有重启。

[root@imzcy ~]# kubectl -n prod-ingress-nginx-wan get pods -A |grep "NAME\|nginx-controller"

NAMESPACE NAME READY STATUS RESTARTS AGE

prod-ingress-nginx-wan ingress-nginx-controller-hsp8w 1/1 Running 0 2d6h

prod-ingress-nginx-wan ingress-nginx-controller-xw4bh 1/1 Running 0 57d查看 controller 运行日志 - 首先看时间,10点的日志就不用看了,14:20 和故障开始时间差不多了。

看如下 L3 行开始的日志内容是发现了名为 prod/ing-java-wechat-dm 这个 ingress 资源导致配置 reload 了。并且在 14:26 因配置发生更改 controller 又重载了配置。看来是动配置了。和另一个运维同事确认,他说刚才确实新建了一个 ingress 但是已经删掉了。。。

[root@imzcy ~]# kubectl -n prod-ingress-nginx-wan logs ingress-nginx-controller-hsp8w --tail=10

I1019 10:10:53.007585 11 event.go:285] Event(v1.ObjectReference{Kind:"Pod", Namespace:"prod-ingress-nginx-wan", Name:"ingress-nginx-controller-hsp8w", UID:"02f6a383-f965-4a9f-9380-b40e2a819d61", APIVersion:"v1", ResourceVersion:"49397301372", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration

I1019 14:20:22.116245 11 store.go:430] "Found valid IngressClass" ingress="prod/ing-java-wechat-dm" ingressclass="prod-nginx-wan"

I1019 14:20:22.117263 11 controller.go:168] "Configuration changes detected, backend reload required"

I1019 14:20:22.118805 11 event.go:285] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"prod", Name:"ing-java-wechat-dm", UID:"45930fad-fb93-40a0-9b55-31ceaded1714", APIVersion:"networking.k8s.io/v1", ResourceVersion:"49576864925", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync

I1019 14:20:22.253860 11 controller.go:185] "Backend successfully reloaded"

I1019 14:20:22.254591 11 event.go:285] Event(v1.ObjectReference{Kind:"Pod", Namespace:"prod-ingress-nginx-wan", Name:"ingress-nginx-controller-hsp8w", UID:"02f6a383-f965-4a9f-9380-b40e2a819d61", APIVersion:"v1", ResourceVersion:"49397301372", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration

I1019 14:20:45.571822 11 event.go:285] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"prod", Name:"ing-java-wechat-dm", UID:"45930fad-fb93-40a0-9b55-31ceaded1714", APIVersion:"networking.k8s.io/v1", ResourceVersion:"49576888560", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync

I1019 14:26:59.078674 11 controller.go:168] "Configuration changes detected, backend reload required"

I1019 14:26:59.206761 11 controller.go:185] "Backend successfully reloaded"

I1019 14:26:59.207456 11 event.go:285] Event(v1.ObjectReference{Kind:"Pod", Namespace:"prod-ingress-nginx-wan", Name:"ingress-nginx-controller-hsp8w", UID:"02f6a383-f965-4a9f-9380-b40e2a819d61", APIVersion:"v1", ResourceVersion:"49397301372", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration查看 K8S 审计日志

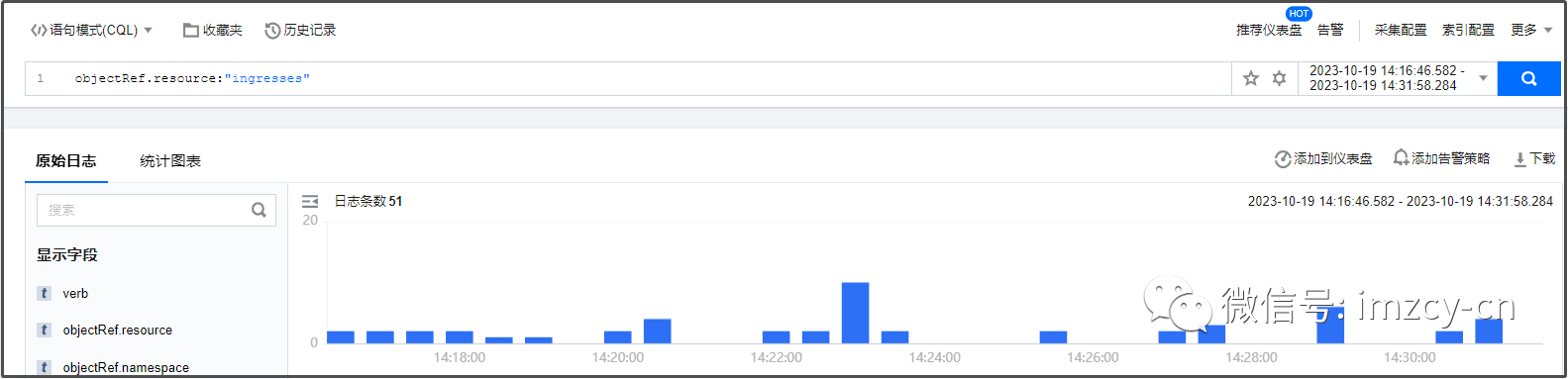

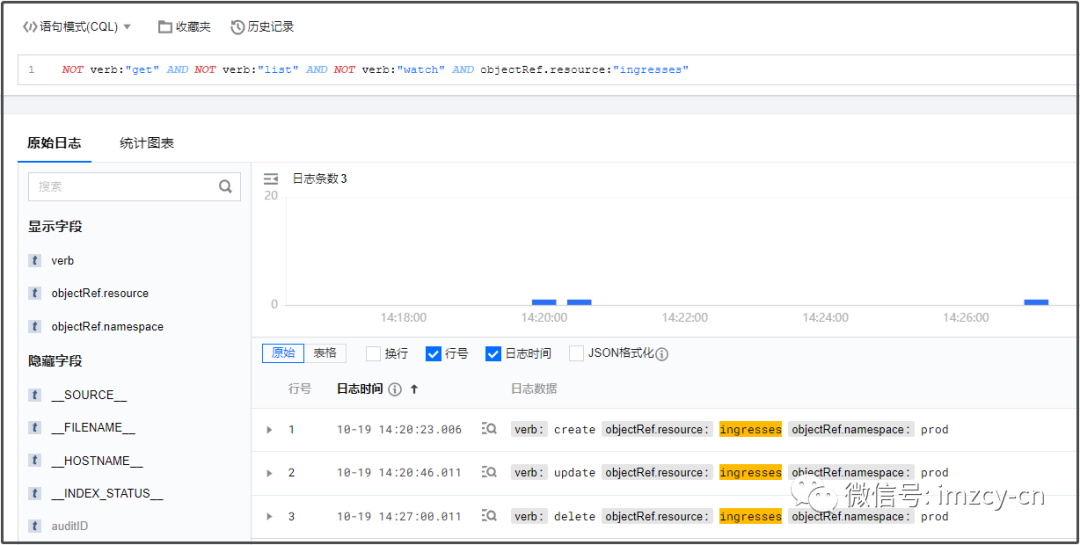

筛选资源类型为 ingress 发现有51条日志

按 verb 分组后,找到了!

排除读的动作,看到三条日志:

14:20:23 创建的 ingress 资源

14:20:46 更新了 ingress 资源

14:27:00 删除了 ingress 资源

看这个 ingress 资源的创建时间和删除时间,和故障开始和结束时间基本是对上了,大概率就是这个导致的了。查看 create 详情。将日志中requestObject字段内容展开后得到如下json内容。

{

"kind": "Ingress",

"apiVersion": "networking.k8s.io/v1",

"metadata": {

"name": "ing-java-wechat-dm",

"namespace": "prod",

"creationTimestamp": null,

"annotations": {

"nginx.ingress.kubernetes.io/cors-allow-headers": "DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Authorization,Origin,X-Requested-With,Content-Type,Accept,traceparent",

"nginx.ingress.kubernetes.io/cors-allow-methods": "PUT, GET, POST, OPTIONS",

"nginx.ingress.kubernetes.io/cors-allow-origin": "*",

"nginx.ingress.kubernetes.io/enable-cors": "true"

}

},

"spec": {

"ingressClassName": "prod-nginx-wan",

"rules": [

{

"host": "gateway.example.com",

"http": {

"paths": [

{

"path": "/wechat",

"pathType": "Prefix",

"backend": {

"service": {

"name": "svc-java-wechat",

"port": {

"number": 80

}

}

}

}

]

}

}

]

},

"status": {

"loadBalancer": {}

}

}

# 查看 update 内容请求体

{

"kind": "Ingress",

"apiVersion": "networking.k8s.io/v1",

"metadata": {

"name": "ing-java-wechat-dm",

"namespace": "prod",

"selfLink": "/apis/networking.k8s.io/v1/namespaces/prod/ingresses/ing-java-wechat-dm",

"uid": "45930fad-fb93-40a0-9b55-31ceaded1714",

"resourceVersion": "49576864925",

"generation": 1,

"creationTimestamp": "2023-10-19T06:20:22Z",

"annotations": {

"nginx.ingress.kubernetes.io/cors-allow-headers": "DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Authorization,Origin,X-Requested-With,Content-Type,Accept,traceparent",

"nginx.ingress.kubernetes.io/cors-allow-methods": "PUT, GET, POST, OPTIONS",

"nginx.ingress.kubernetes.io/cors-allow-origin": "*",

"nginx.ingress.kubernetes.io/enable-cors": "true"

},

"managedFields": [

{

"manager": "kubectl-create",

"operation": "Update",

"apiVersion": "networking.k8s.io/v1",

"time": "2023-10-19T06:20:22Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:metadata": {

"f:annotations": {

".": {},

"f:nginx.ingress.kubernetes.io/cors-allow-headers": {},

"f:nginx.ingress.kubernetes.io/cors-allow-methods": {},

"f:nginx.ingress.kubernetes.io/cors-allow-origin": {},

"f:nginx.ingress.kubernetes.io/enable-cors": {}

}

},

"f:spec": {

"f:ingressClassName": {},

"f:rules": {}

}

}

}

]

},

"spec": {

"ingressClassName": "prod-nginx-wan",

"rules": [

{

"host": "gateway.example.com",

"http": {

"paths": [

{

"path": "/wechat",

"pathType": "Prefix",

"backend": {

"service": {

"name": "svc-java-wechat",

"port": {

"number": 80

}

}

}

}

]

}

}

]

},

"status": {

"loadBalancer": {

"ingress": [

{

"ip": "192.18.52.4"

},

{

"ip": "192.18.53.11"

}

]

}

}

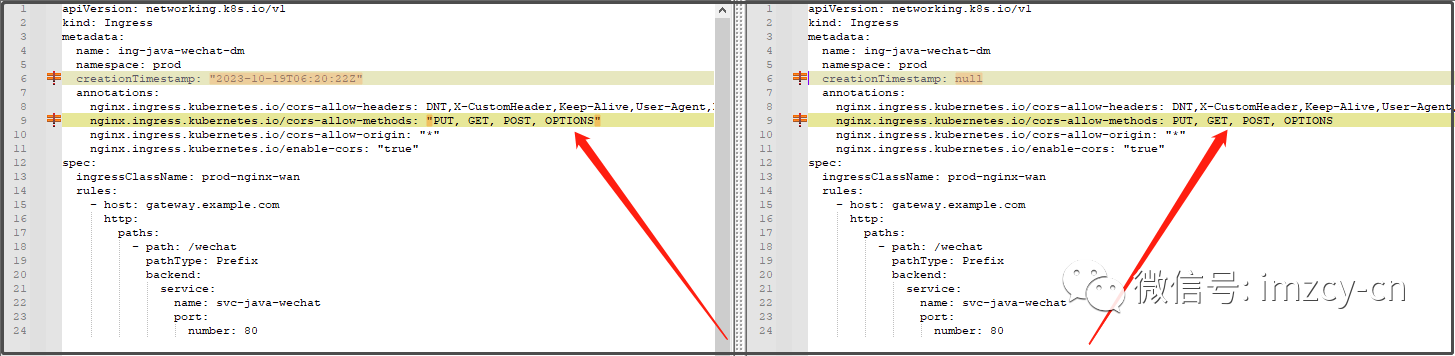

}将上面 json 转换为 yaml 格式后,对比 create 和 update 的内容发现更新只是 nginx.ingress.kubernetes.io/cors-allow-methods 的值有没有双引号的区别,双引号本身应该关系不大。

确认新增加的 ingress 是否会引起故障

查看当前 ingress 列表

[root@imzcy ~]# kubectl -n prod get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ing-express-butlerapp prod-nginx-wan butlerapp.example.com 192.18.52.4,192.18.53.11 80 31d

ing-express-signinapp prod-nginx-wan signinapp.example.com 192.18.52.4,192.18.53.11 80 83d

ing-java-logisticlogic prod-nginx-wan * 192.18.52.4,192.18.53.11 80 2d1h

ing-java-notification-producer-biz prod-nginx-wan * 192.18.52.4,192.18.53.11 80 2d1h

ing-java-wechat prod-nginx-wan * 192.18.52.4,192.18.53.11 80 2d3h

ing-paycentertask prod-nginx-wan * 192.18.52.4,192.18.53.11 80 2d1h

ing-paycenterweb prod-nginx-wan * 192.18.52.4,192.18.53.11 80 2d1h当前通知服务 ingress 配置

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

nginx.ingress.kubernetes.io/cors-allow-headers: DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Authorization,Origin,X-Requested-With,Content-Type,Accept,traceparent

nginx.ingress.kubernetes.io/cors-allow-methods: PUT, GET, POST, OPTIONS

nginx.ingress.kubernetes.io/cors-allow-origin: '*'

nginx.ingress.kubernetes.io/enable-cors: "true"

name: ing-java-notification-producer-biz

namespace: prod

spec:

ingressClassName: prod-nginx-wan

rules:

- http:

paths:

- backend:

service:

name: svc-java-notification-producer-biz

port:

number: 80

path: /notification

pathType: Prefix

#今天新建的 ingress 资源配置

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ing-java-wechat-dm

namespace: prod

creationTimestamp: "2023-10-19T06:20:22Z"

annotations:

nginx.ingress.kubernetes.io/cors-allow-headers: DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Authorization,Origin,X-Requested-With,Content-Type,Accept,traceparent

nginx.ingress.kubernetes.io/cors-allow-methods: "PUT, GET, POST, OPTIONS"

nginx.ingress.kubernetes.io/cors-allow-origin: "*"

nginx.ingress.kubernetes.io/enable-cors: "true"

spec:

ingressClassName: prod-nginx-wan

rules:

- host: gateway.example.com

http:

paths:

- path: /wechat

pathType: Prefix

backend:

service:

name: svc-java-wechat

port:

number: 80嗯??? 不会是新增加的 ingress 显式指定了 host 配置,导致所有访问 gateway.example.com 这个域名的请求都被指向了 svc-java-wechat 这个服务了吧? 真有可能啊

ingress 没有指定 host 的话,好像就会成为默认规则去匹配所有没被其他规则匹配的请求,因为上面我创建的两个 dotnet 项目的 ingress 显式指定了其他独立的域名,而所有其他 java 项目都没有指定 host 并且本身都认为自己使用的 gateway.example.com 这个域名,都是默认规则大家级别都一样所以刚好相安无事(只要没有冲突的一级路径),现在新增加的 ingress 显式指定了 gateway.example.com 这个域名,域名直接匹配上了并且没有其他 ingress 同样指定 gateway.example.com 这个域名监听其他路径,那真的会将所有流量都匹配过去。

由于新增的 ingress 资源 host 指定了 gateway.example.com 域名但是 path 只匹配了 /wechat 这个路径,那么本来类似通过 /notification 访问通知服务的其他请求就会匹配失败,ingress-nginx-controller 就将这些请求转发给了默认后端(upstream-default-backend),对,就是这样。

到 ingress-nginx-controller 上一级 LB 上检查日志,可以看到故障期间,server_name 为 gateway域名的请求状态码正常为200的,只有 /wechat/ 开头的接口。果然,石锤了!!!

单独看挺正常的一个 ingress 配置,却导致了线上故障,真的是有点一言难尽。不过话说回来,如果这个新增的 ingress 提前在灰度环境做了测试,肯定能验证出来的;或者说如果还是按照之前那些 java 项目 ingress 资源一样没有显式指定 host 绑定 gateway.example.com 域名也不会出现这个问题。归根到底还是对生产环境没有敬畏之心

最后两点:

1、对要操作的对象一定要熟悉其工作原理,并且结合当前的实际环境去判断会不会有影响。

2、一定要先在灰度环境做验证!

文章链接地址:https://wojc.cn/archives/1531.html

本站文章除注明[转载|引用|来源|来自],均为本站原创内容,转载前请注明出处

留言